Snap plans to roll out a watermarking feature that will help you better distinguish real images from those generated by AI tools. On Tuesday, the mobile messaging company announced that Snapchat will add a watermark to images created using Snap's generative AI tools.

The watermark, depicted as a small ghost logo with the familiar sparkle icon, will appear on any such images exported, downloaded, or saved to the camera roll. Anyone who receives the image will see the logo and icon, both in Snapchat and outside of the platform.

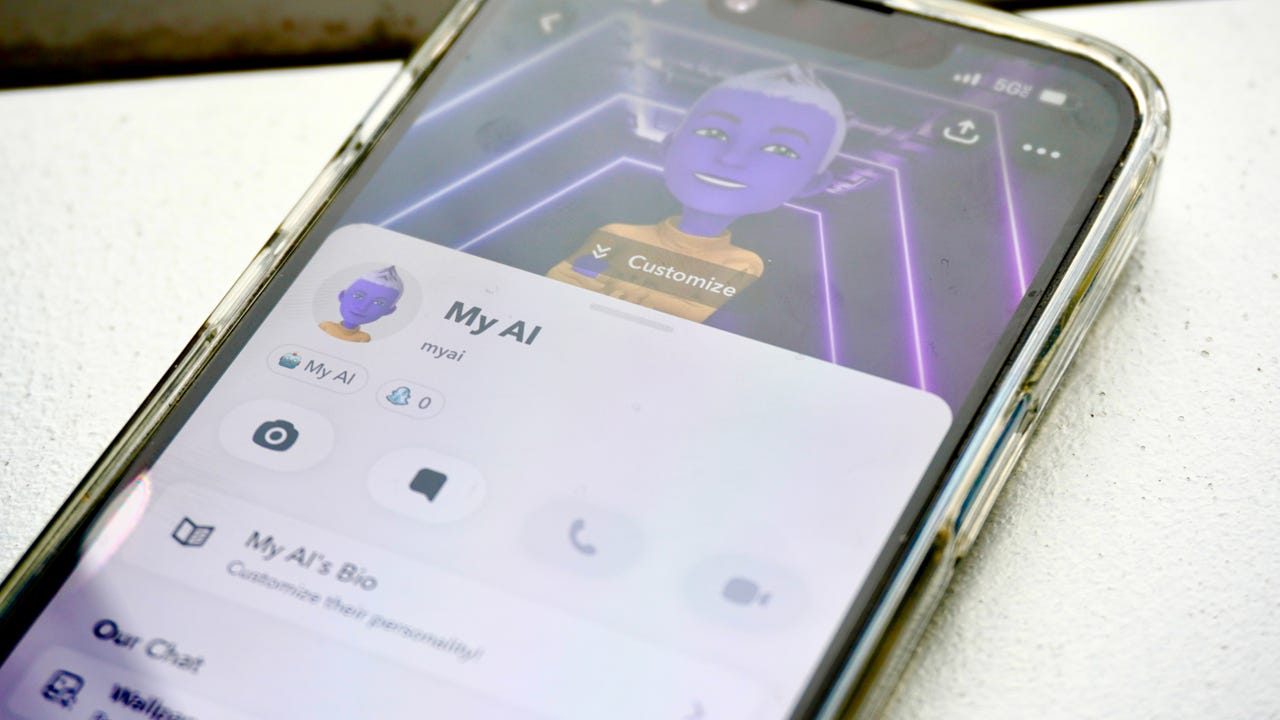

In recent years, Snap has debuted a variety of AI-powered tools. You can use AI to generate wallpapers to spice up your conversations, transform your face with freaky lenses, place yourself in different scenes via Snapchat Dreams, chat with the My AI chatbot, and even create a virtual pet.

The problem here is that people can easily be tricked into believing that an AI-generated image is real. Sure, if I share a photo taken with a filter that makes me look like an alien or an octopus, the other person knows it's not real (unless they believe in aliens or talking sea creatures). In other cases, though, AI tools can make something look quite legitimate.


Snap already uses a few tricks to tag certain AI-generated items. Contextual icons, symbols, and labels appear when you interact with a Snapchat feature powered by AI. A Dreams image created by AI will display a context card with more details. An image expanded with the Extend tool, which makes it appear more zoomed out, will carry the sparkle icon to denote that it's AI-generated.

Since AI is used and abused for political reasons, Snap also noted that its employees vet political ads for any misleading use of content. In particular, they look for signs that AI was employed to cook up a deceptive image.

In its news release, Snap explained that the company also tries to find and test for possible flaws in its own AI models. Snap uses red-teaming, a process in which internal or external people pretend to be hackers and attackers looking to exploit vulnerabilities or sneak past safeguards in generative AI models. In Snap's case, red teamers look for potentially biased AI results and content.

"We believe in the tremendous potential for AI technology to improve our community's ability to express themselves and connect with one another," Snap said in its release, "and we're committed to continuing to improve upon these safety and transparency protocols."

