Microsoft has been touting its Windows Recall AI feature as a must-have for anyone who wants to remember an old webpage or message. But a new reveal suggests it may also come with its fair share of security problems.

Ethical hacker Alex Hagenah has released a tool, called TotalRecall, that shows how anyone with enough know-how could copy the Recall data saved on a Windows machine and read it without having to defeat any encryption. According to Hagenah, whose work was reported earlier by Wired, he analyzed Windows Recall and found that the feature -- which takes screen captures of a Windows machine every five seconds -- stores the data completely unencrypted on the user's computer.

"TotalRecall copies the databases and screenshots and then parses the database for potentially interesting artifacts," Hagenah wrote in a GitHub posting about TotalRecall. "You can define dates to limit the extraction as well as search for strings (that were extracted via Recall OCR) of interest. There is no rocket science behind all this."

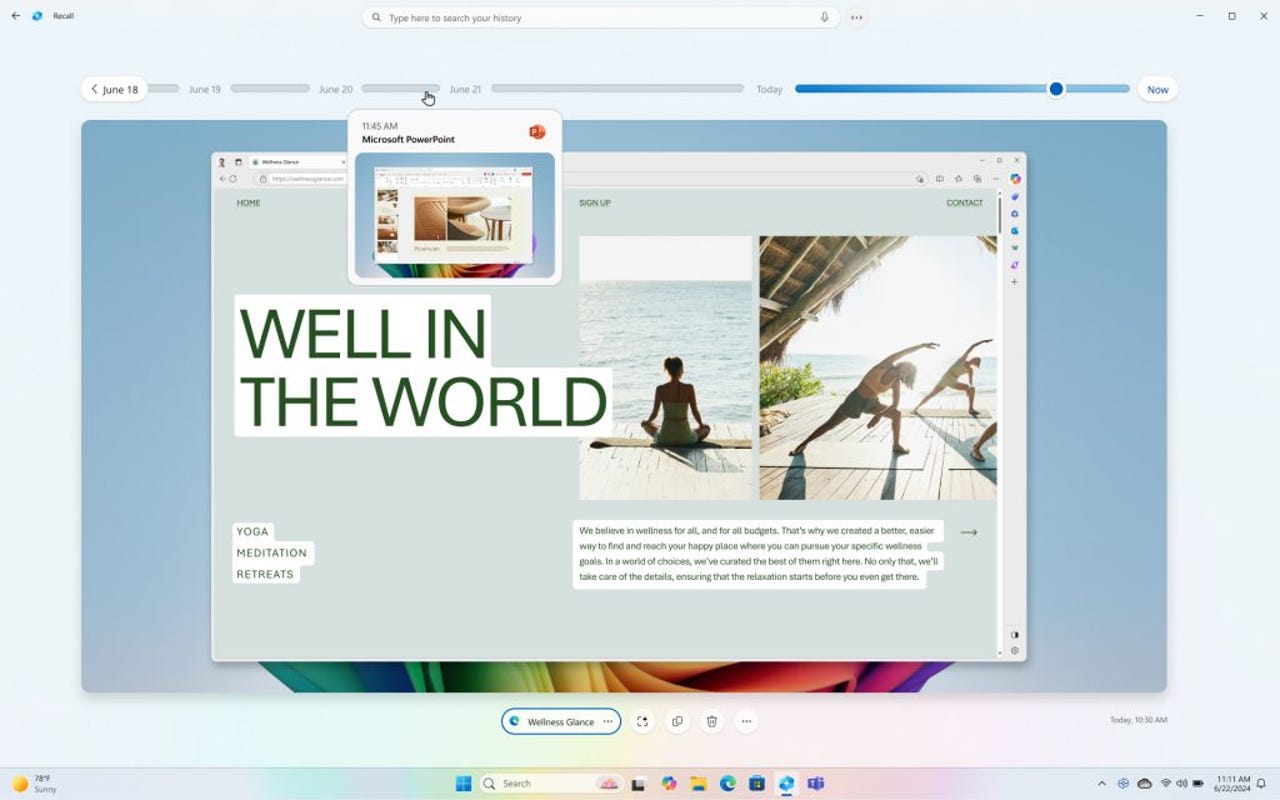

Microsoft unveiled the Windows Recall feature last month, touting it as the next iteration of using artificial intelligence (AI) to remember what you do on a PC. The feature captures a snapshot of the PC's screen every five seconds and can be queried for information, including messages previously sent, conversations with friends, and even a recipe that users may have accessed a week prior. Microsoft said the feature would save users time and make the experience of using Windows 11 far more efficient.

Microsoft also touted the fact that the captures would be stored on the device, so data wouldn't be transferred to the cloud, preserving security. A Windows 11 machine's entire hard drive is encrypted when a user isn't logged into their system account. However, when the user does log in, the entire drive is decrypted, making information -- including Recall snapshots -- available to the user and readily accessible to bad actors who want to steal the data.

TotalRecall is designed to run on a target PC and automatically find where Recall snapshots are stored. The tool can then limit its analysis to a date range or look at what happened on a person's computer at a specific time. While the weakness hasn't been exploited in the wild -- Recall hasn't launched yet, after all -- it could pave the way for hackers with know-how or even domestic abusers to run a version of TotalRecall on a machine surreptitiously to monitor and steal sensitive information, conversations, emails, and more.
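To illustrate why unencrypted local storage matters, here is a minimal Python sketch of the kind of date-range and keyword filtering Hagenah describes. It is not TotalRecall's code. The use of SQLite, the `captures` table, its `captured_at` and `ocr_text` columns, and the sample rows are all hypothetical stand-ins chosen for illustration, and the sketch runs against a throwaway in-memory database rather than any real Recall files.

```python
import sqlite3
from datetime import datetime


def build_demo_db():
    """Create an in-memory database with a HYPOTHETICAL schema and made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE captures (captured_at TEXT, ocr_text TEXT)")
    conn.executemany(
        "INSERT INTO captures VALUES (?, ?)",
        [
            ("2024-06-01T09:00:00", "Grocery list: eggs, flour"),
            ("2024-06-02T14:30:05", "Bank login password reset"),
            ("2024-06-09T08:15:00", "Password manager settings"),
        ],
    )
    return conn


def search_captures(conn, start, end, needle):
    """Return (timestamp, text) rows inside [start, end] whose text contains needle.

    Timestamps are stored as ISO 8601 strings, so plain string comparison
    orders them chronologically. The match is case-insensitive.
    """
    query = (
        "SELECT captured_at, ocr_text FROM captures "
        "WHERE captured_at BETWEEN ? AND ? "
        "AND instr(lower(ocr_text), lower(?)) > 0 "
        "ORDER BY captured_at"
    )
    params = (start.isoformat(), end.isoformat(), needle)
    return conn.execute(query, params).fetchall()


conn = build_demo_db()
hits = search_captures(conn, datetime(2024, 6, 1), datetime(2024, 6, 3), "password")
for captured_at, text in hits:
    print(captured_at, text)
```

Once text extracted from screenshots sits in a readable database, a few lines of ordinary query code are enough to narrow it by time and keyword, which is consistent with Hagenah's remark that "there is no rocket science behind all this."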

For its part, Microsoft, which hasn't responded to a request for comment, has been informed by security researchers about the possible risks of allowing Recall to operate in this fashion. And while the company hasn't said if it will change anything, it did say on a support page about Recall that the feature could be turned off in Windows, effectively rendering any exploit inert.

It's also worth noting that TotalRecall was developed for a prerelease version of Windows 11. In some cases, those prerelease versions are configured differently than final builds, and Microsoft may add security features to Recall that it didn't deliver in the prerelease version.

Even so, time is running out for Microsoft to do something if the Recall exploit is as big a concern as Hagenah suggests: the service launches on Windows on June 18.

Updated at 12:15 p.m. ET to include more detail on how Recall stores data and the possibility of a final version of the software launching with more security protections.
