Podcasts are at a peak of popularity, with 504.9 million listeners worldwide, the most since 2019, according to a report. To improve the listening experience, Apple is adding a new feature to Apple Podcasts: transcripts.

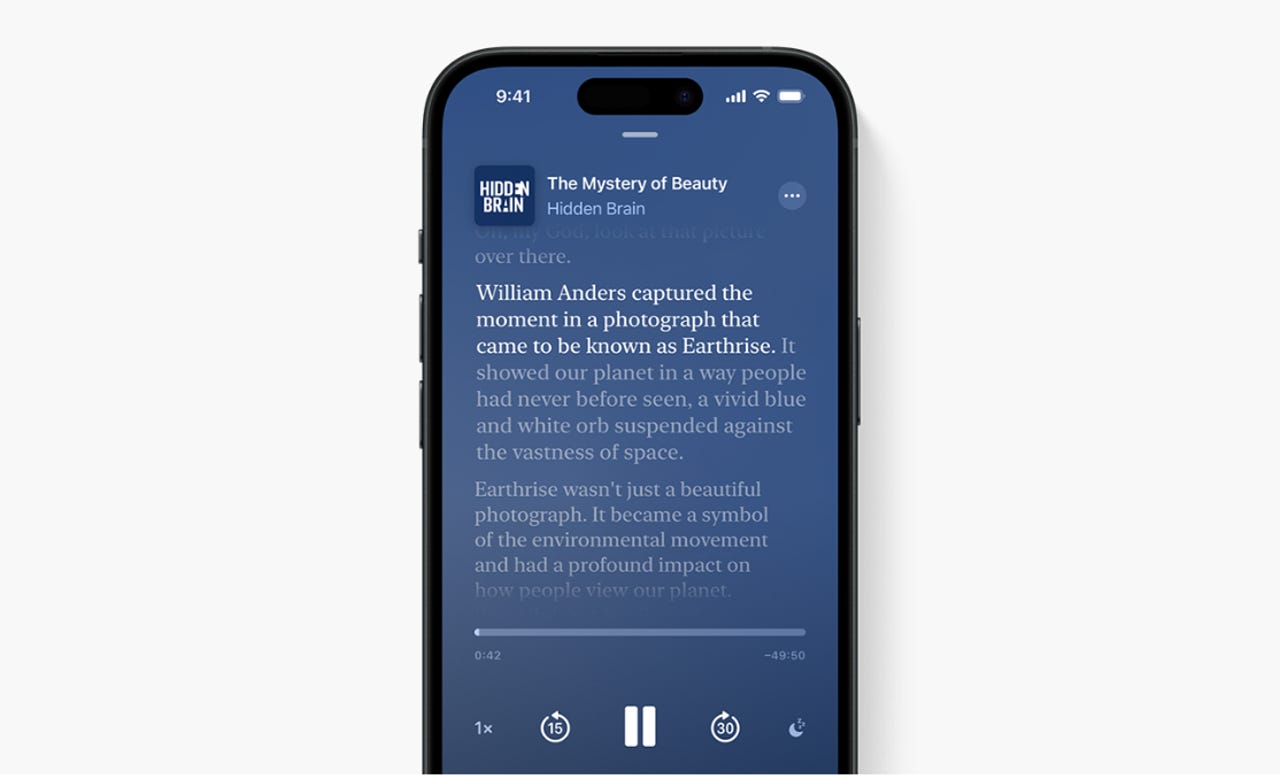

Podcast transcripts will work much like time-synced lyrics in Apple Music. Users get the full transcript, with each word highlighted as the episode plays so it is easy to follow along, and they can tap any part of the text to start playback from that point.

Transcripts will also let you search an episode for a specific word or phrase.

Apple will generate these transcripts automatically after a new episode is published, so a transcript may not be available until shortly after the episode goes live.

Creators will also be able to edit the generated transcript or upload their own through Apple Podcasts Connect, Apple's management dashboard for podcast creators.

The feature is primarily meant to make podcasts more accessible. As Apple put it, "Apple is introducing transcripts on Apple Podcasts, making it easier for anyone to access podcasts." Beyond being an accessibility win, it is also useful for podcast fans in general.

For example, no matter how closely you pay attention to a podcast, you will often miss a word or a sentence, and until now the only option has been to scrub back and listen to that part again. Now, you can check the transcript to see what you missed.

The feature arrives this spring with iOS 17.4 and will support podcasts in English, French, German, and Spanish.