This is the third blog in my "Future of Hardware" series, which looks at how key industry trends and emerging innovations are shaping computing system design and operations. The first blog examined the exponential growth in computing performance, while the second examined the trend toward disaggregating computing componentry. This blog looks at recent advances in cooling technology, specifically liquid cooling for compute hardware.

Walk into any modern data center and the first thing you're likely to notice is not what you see but what you hear: the unmistakable cacophony of chillers and fans all whirring together to keep ambient temperatures as cool as possible. What you're not likely to see at a glance are the many other fans inside compute, network, and storage systems, also working hard to keep their components even cooler.

There's a simple reason for this. Like any machine (including your car), computers and their many components do not operate well when overheated. This is why virtually all computers and servers today are designed with some internal cooling mechanism (most likely multiple fans) and room for airflow to keep internal ambient temperatures from exceeding 115 degrees Fahrenheit (about 46 degrees Celsius).

Air cooling has been around since the inception of computers; air conditioning was a must-have for carrying heat away from the vacuum tubes in the earliest machines. With the advent of dense integrated circuits, experimentation with cooling progressed, yielding better air cooling but also a variety of alternatives, including liquid (water) cooling. Still, air cooling has been the dominant cooling technology by a wide margin since the heyday of client/server computing, with liquid cooling largely relegated to high-performance computing and other specialized systems.

But this may all be about to change...

The reasons for the renewed focus on non-air cooling technologies are rooted in the trends covered in the two previous blogs of my "Future of Hardware" series. First, I wrote about the rapid growth of computing performance metrics such as chip/socket density, memory and storage capacity, and the associated power requirements. Second, I discussed the trend toward disaggregating computing components (specifically memory), which will likely lead to more processing-intensive computing systems. In turn, these next-gen systems will need to dissipate more heat than traditional fans and airflow may be able to handle.

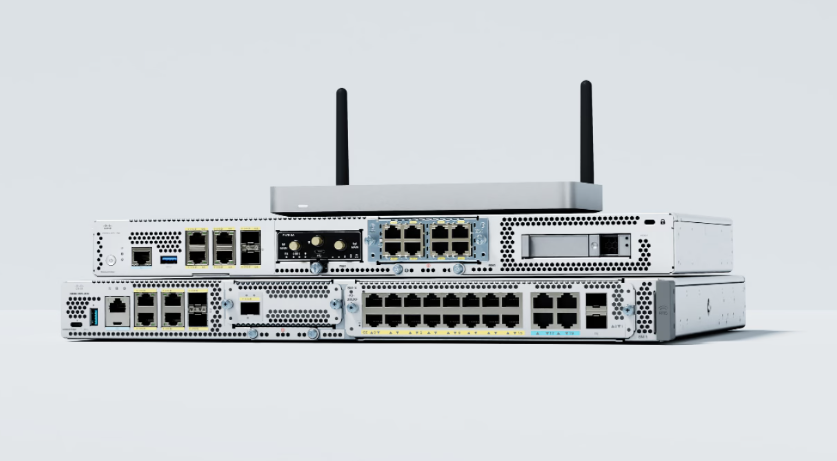

This is one of the reasons for all the buzz within the computing and data center industry around advanced cooling technologies such as microchannel cooling and a host of liquid cooling techniques. The idea is that better cooling allows computers to become that much more powerful, which in turn can accelerate innovation. In many ways, this is a holy-grail quest for the computing industry, and many companies, including Cisco, are actively involved.

I'll focus the rest of this blog on liquid cooling, which Cisco has been evaluating closely in conjunction with our suppliers. In time, I predict that liquid cooling will evolve from being largely the domain of home-built PC enthusiasts to a mainstream technology found in data centers everywhere. There are still many details to work out, including which liquid cooling technique will win out (think VHS vs. Betamax or HD DVD vs. Blu-ray).

In the mix are technologies such as single-phase and two-phase immersion cooling, which works by literally submerging computing components in liquid. Another is single-phase and two-phase cold plate liquid cooling, which transfers heat from components into a connected heat-exchange system that is cooled by liquid flowing through it.
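
To put a rough number on why moving liquid carries heat so much more effectively than moving air, here is a back-of-the-envelope sketch based on the standard steady-state heat balance Q = ṁ · c_p · ΔT (heat carried equals mass flow times specific heat times the coolant's temperature rise). The property values are approximate textbook figures near room temperature, and the 1 kW load and 10 K temperature rise are hypothetical numbers chosen for illustration, not measurements from any Cisco system.

```python
# Back-of-the-envelope sketch using the steady-state heat balance
#   Q = m_dot * c_p * delta_T
# ASSUMPTION: approximate property values near room temperature.
WATER_DENSITY = 998.0  # kg/m^3
WATER_CP = 4186.0      # specific heat, J/(kg*K)
AIR_DENSITY = 1.2      # kg/m^3
AIR_CP = 1005.0        # specific heat, J/(kg*K)


def required_flow_m3_per_s(heat_watts, density, cp, delta_t_kelvin):
    """Volumetric coolant flow needed to remove heat_watts while the
    coolant warms by delta_t_kelvin (rearranged heat balance)."""
    mass_flow = heat_watts / (cp * delta_t_kelvin)  # kg/s
    return mass_flow / density                      # m^3/s


# HYPOTHETICAL example: a 1 kW server with a 10 K coolant temperature rise.
heat = 1000.0
dt = 10.0
water_flow = required_flow_m3_per_s(heat, WATER_DENSITY, WATER_CP, dt)
air_flow = required_flow_m3_per_s(heat, AIR_DENSITY, AIR_CP, dt)

# Convert m^3/s to liters per minute (x1000 L/m^3, x60 s/min).
print(f"water: {water_flow * 1000 * 60:.2f} L/min")
print(f"air:   {air_flow * 1000 * 60:.0f} L/min")
print(f"air needs about {air_flow / water_flow:.0f}x the volume of water")
```

Under these assumptions, air has to move roughly 3,500 times the volume that water does to carry away the same heat, which is the basic physics behind the interest in cold plates and immersion.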

Irrespective of which technology may rule supreme, there are many compelling reasons for adopting liquid cooling in general. Here are a few:

These developments are nothing but good news for hardware designers, including those of us working on Cisco UCS. When we launched the X-Series, we touted it as a system built for the next decade of computing design and operations. This includes the ability to integrate new and emerging technologies, including better cooling systems and specifically liquid cooling. A couple of the more interesting projects in flight are:

While these projects are experimental for now, I do foresee them becoming mainstream soon. As with most things involving computing technology, innovation and adoption will follow a measured, phased evolution. Specifically, I expect data center chillers to remain in place for a while even as rack-based coolant distribution units (CDUs) are installed. Over time, I expect CDUs themselves to evolve into centralized units at the data center level (much as power distribution systems did), which will revolutionize how data centers are designed and populated. Eventually, data center operators will consider even more extreme liquid cooling methods, including full system immersion.

The downstream and ancillary effects can be equally compelling. Not only will we be able to build more energy-efficient and environmentally friendly servers and data centers, but we will also enable the next generation of computing solutions. This includes converged and hyper-converged infrastructure that can run more workloads and support more apps using less energy and a smaller footprint than is possible today.

Stay tuned for more on this, as this future of hardware is not as far off as you might think.

Check out my podcast interview with Data Center Knowledge about liquid cooling and the future of computing.

Future of Hardware Blog Series:

Podcast interview on Data Center Knowledge

Hot Tags :

Featured

#ciscodcc

Cisco UCS X-Series

high performance computing

Liquid Cooling

computing performance
