June Wan/

While ChatGPT's ability to generate human-like answers has been widely celebrated, it is also posing the biggest risk to businesses.

The artificial intelligence (AI) tool already is being used to enhance phishing attacks, said Jonathan Jackson, BlackBerry's Asia-Pacific director of engineering.

Pointing to activities spotted in underground forums, he said there were indications that hackers were using OpenAI's ChatGPT and other AI-powered chatbots to improve impersonation attacks. The tools also were being used to power deepfakes and spread misinformation, Jackson said in a video interview. He added that hacker forums were offering services to leverage ChatGPT for nefarious purposes.

In a note posted last month, Check Point Technologies' threat intelligence group manager Sergey Shykevich also noted that signs were pointing to the use of ChatGPT among cybercriminals to speed up their code writing. In one instance, the security vendor noted that the tool was used to successfully complete an infection flow, which included creating a convincing spear-phishing email and a reverse shell that could accept commands in English.

While the attack code developed so far remained fairly basic, Shykevich said it was simply a matter of time before more sophisticated threat actors enhanced the way they used such AI-based tools.

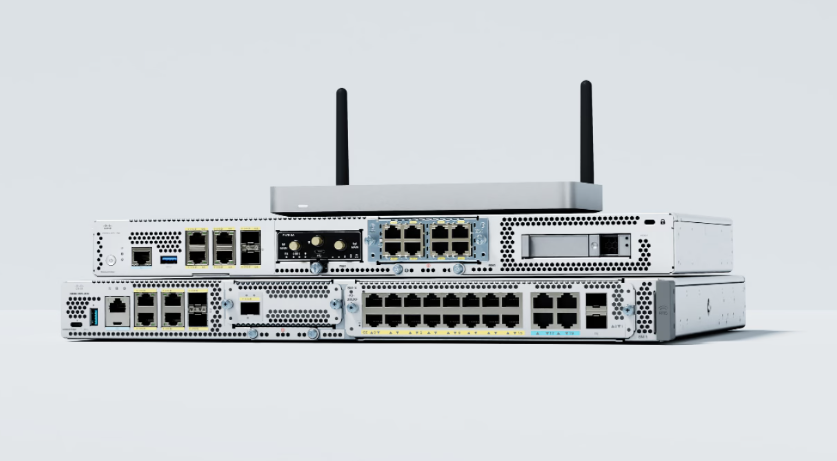

Some "side effects" will emerge from technologies that power deepfakes and ChatGPT, wrote Synopsys Software Integrity Group's principal scientist Sammy Migues, in his 2023 predictions. People who need "expert" advice or technical support on how to configure a new security device can turn to ChatGPT. They also can have the AI tool write crypto modules or run through years of log data to generate budget reviews.

"The possibilities are endless," Migues said. "Sure, the AI is just a mindless automaton spewing things it has assembled, but it can be pretty convincing at first glance."

Jackson noted that the emergence of generative AI applications such as ChatGPT would drive a significant change in the cyber landscape. Security and cyber defense tools will then need to be able to identify new threats emerging from the large language models on which these applications are built, he said.

This is pertinent as businesses are expecting such risks to come soon.

In Australia, 84% of IT decision makers expressed concern about the potential threats generative AI and large language models could bring, according to a recent BlackBerry study, which polled 500 respondents in the country.

The biggest worry, amongst half of the respondents, was that the technology could help less experienced hackers improve their knowledge and develop more specialized skills.

Another 48% were concerned about ChatGPT's ability to produce more believable and legitimate-looking phishing emails; 36% saw its potential to accelerate social engineering attacks.

Some 46% were worried about its use to spread mis- or disinformation, with 67% believing it was likely foreign nations already were using ChatGPT for malicious purposes.

Just over half, at 53%, anticipated the industry was less than a year away from seeing the first successful cyber attack powered by the AI technology, while 26% said this would happen in one to two years, and 12% said it would take three to five years.

And while 32% felt that the technology would neither improve nor worsen cybersecurity, 24% believed it would aggravate the threat landscape. On the other hand, 40% said it could help improve cybersecurity.

Some 90% of Australian respondents believed governments had a responsibility to regulate advanced technologies, such as ChatGPT. Another 40% felt that cybersecurity tools currently were falling behind innovation in cybercrime, with 30% noting that cybercriminals would benefit the most from ChatGPT.

Some 60%, though, said the technology would benefit researchers the most, while 56% believed security professionals could benefit most from it.

About 85% planned to invest in AI-powered cybersecurity tools over the next two years.

However, the use of AI and automation on both sides, to launch as well as defend against cyber attacks, is far from novel. So why the fuss now?

Jackson acknowledged that AI had been used in cyber defense for years, but noted that the unique trait of ChatGPT and other similar tools was their ability to turn inherently complex concepts, such as coding languages, into something anyone could understand.

Such tools run on large language models that were based on huge amounts of curated, contextual trade datasets. "It is very powerful at specific things," he noted. "ChatGPT is an incredibly powerful resource for anybody [who wants] to create good codes or, in this case, malicious codes, such as scripts to bypass a network's defense."

It also can be used to scrape specific individuals' social media profiles in order to impersonate them in spear-phishing attacks.

"The biggest impact is on social engineering and impersonation," he said, adding that tools such as ChatGPT will be used to improve phishing campaigns.

With the emergence of large language models, he stressed the need to rethink traditional approaches to cyber and data defense. He pointed to the importance of tapping AI and machine learning to combat AI-powered attacks.

Investing in AI and machine learning capabilities will help organisations identify potential threats more quickly, which is important, he said. "Using humans is no longer realistic and hasn't been for the past few years."

Jackson noted that BlackBerry has been working on the algorithms needed to train models to identify changes in attack techniques and block malicious content that appears to be generated by large language models. Volume and velocity will be key, he added, so it can keep up with potential attacks even as ChatGPT and similar tools continue to evolve.

He further stressed that these applications had a positive impact on the industry, too. BlackBerry, for instance, is using ChatGPT for advanced threat hunting, tapping its coding capability to digest and analyze complex scripts, so it can study how these operate and enhance its defense tactics.
