To what extent do CDN caches deep inside the ISP or operator network improve QoE? Does it suffice to deploy the CDN appliance just past the peering point? This blog post describes a project that explores this very question. It was sponsored by Cisco's Research and Open Innovation initiative and undertaken by Prof. Sanjay Rao and his graduate student Ehab Ghabashneh at Purdue University, and I had the privilege of advising it. We summarize the findings here. The interested reader can find more details in the publication (to appear in the Proceedings of IEEE INFOCOM, April 2020). Now, why is the answer to this question so significant?

Conventional wisdom among video streaming experts holds that low-latency delivery is important for QoE. If edge deployment of video caches conclusively improves consumer QoE, then there is a business incentive for CDN providers to establish tenancies at edge locations, including Multi-access Edge Computing sites in a 5G network. This changes the dynamics of the edge ecosystem significantly, since the incentive of improved QoE will create a market in which content providers seek deeper CDN deployments.

Internet access speed appears to be a critical factor in driving Internet traffic and hence, Cisco tracks access speeds as part of the annual Cisco Visual Networking Index. When speed increases, users stream and download greater volumes of content, and adaptive streaming increases bit rates automatically according to available bandwidth. Service providers find that users with greater access bandwidth consume more traffic. By 2022, households with high-speed fiber connectivity will consume 23 percent more traffic than households connected by DSL or cable broadband, globally. The average fiber-to-the-home (FTTH) household consumed 86 GB per month in 2017 and will consume 264 GB per month by 2022.

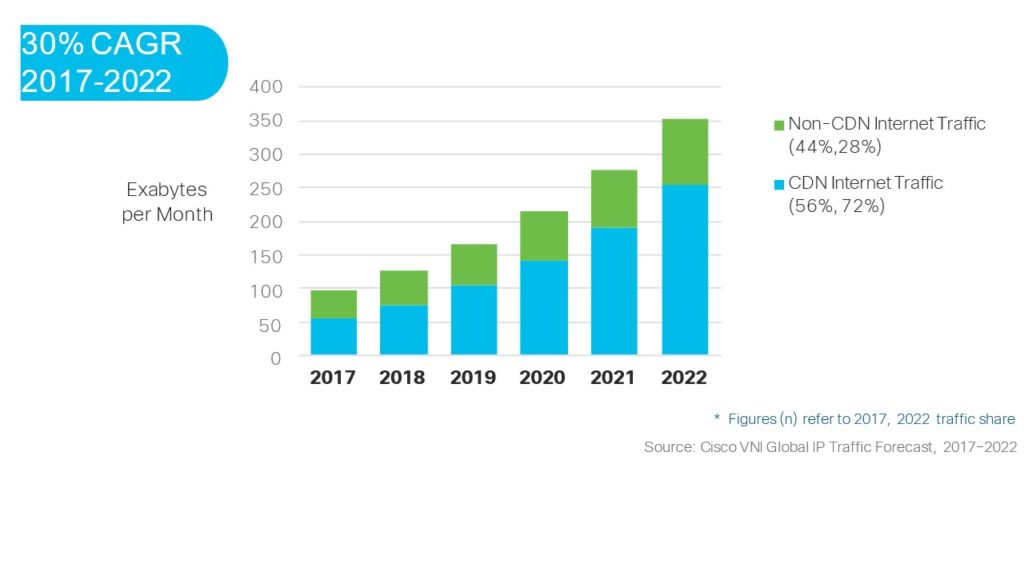

As shown in Figure 1 below, a significant percentage of the traffic is now delivered by CDNs and that fraction will only grow.

Figure 1: Annual growth in Internet traffic

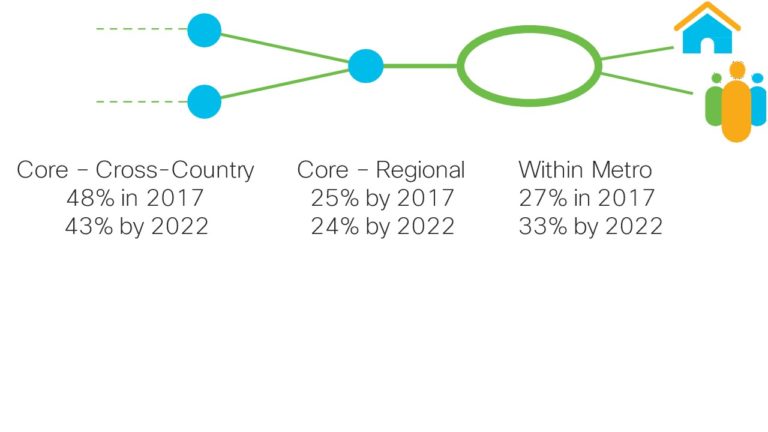

Content Delivery Network (CDN) products are designed to address the needs of content publishers for greater QoE. CDNs will carry traffic closer to the end user, but presently much CDN traffic is deposited onto regional core networks. However, the metro capacity of service provider networks is growing faster than core capacity and will account for 33 percent of total service provider network capacity by 2022, up from 27 percent in 2017 (Figure 2). A CDN node deployed inside an ISP or operator network can bypass congestion points in the network that choke traffic and restrict throughput. For an ISP or operator, on the other hand, installing a third-party CDN appliance inside the network reduces fees introduced by the asymmetric flow of traffic (e.g. video towards consumers) across the peering point. Most CDNs are deployed in a hierarchical fashion, with multiple levels of caching.

Figure 2: Service Provider capacity moving closer to the edge. Over one-third of capacity will bypass core completely by 2022

The answer to the question of whether there is a QoE advantage hinges on whether low-latency access to consumers from an edge location offers benefits over streaming from a more centralized location. The question does not have an obvious answer because of the way video streaming works. Today, most consumer video is delivered using a technique known as "HTTP Adaptive Streaming" (or "HAS" for short). In HAS, a client requests video in "chunks" of variable byte size but fixed playout time (3-16 seconds). The size of the chunk is selected adaptively, from a variety of codec rates, based on the predicted condition of the network between the streamer and the client. The art in HAS consists of accurately predicting the network throughput in order to select the highest-quality codec rate (i.e. the largest bitrate) that the network can accommodate. Many algorithms exist that attempt, with varying degrees of success, to build predictive ability into the video streamer. The degree to which low latency benefits these algorithms is not well understood.
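To make the rate-selection step concrete, here is a minimal sketch of a throughput-based chooser. It is an illustration only, not the algorithm used by any particular player or by the study: the bitrate ladder, the harmonic-mean predictor, the window size, and the safety factor are all assumptions chosen for the example.

```python
from statistics import harmonic_mean

# Hypothetical bitrate ladder in kilobits per second (not taken from the study).
BITRATE_LADDER_KBPS = [300, 750, 1200, 2850, 4300, 16000]


def predict_throughput_kbps(recent_kbps, window=5):
    """Predict the next chunk's throughput as the harmonic mean of recent samples."""
    samples = recent_kbps[-window:]
    if not samples:
        return 0.0
    return harmonic_mean(samples)


def select_bitrate_kbps(recent_kbps, safety=0.9):
    """Pick the highest ladder rate that fits under the discounted prediction."""
    budget = safety * predict_throughput_kbps(recent_kbps)
    fitting = [rate for rate in BITRATE_LADDER_KBPS if rate <= budget]
    # With no history, or a very slow network, fall back to the lowest rate.
    return max(fitting) if fitting else BITRATE_LADDER_KBPS[0]
```

If the prediction is too optimistic, the client requests a chunk that is too large and risks rebuffering; if it is too pessimistic, the viewer gets lower quality than the network could have delivered.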

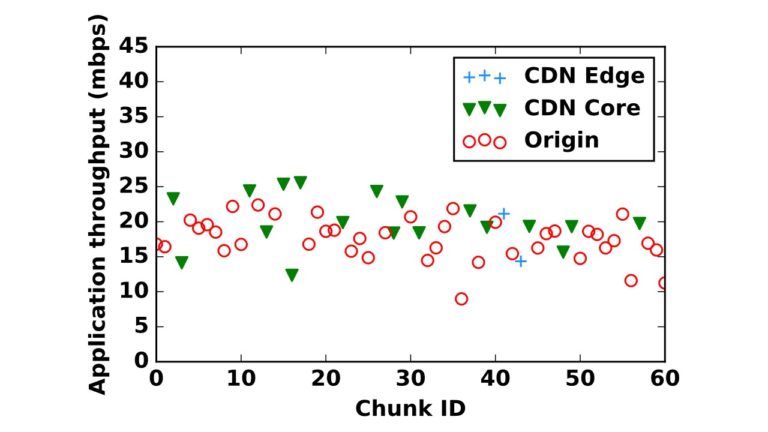

The project measured, from well-provisioned Internet hosts, video sessions of popular video publishers that use well-known CDNs. An interesting aspect of the methodology was the use of publicly visible HTTP headers that show the distribution of objects among the various levels in the CDN hierarchy. For each chunk in a video session, the measurements characterized where in the CDN hierarchy (or at the origin) the chunk was served from, the application-perceived throughput, the chunk size, and the latency incurred before the first byte of each chunk was received.
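The per-chunk bookkeeping described above might be sketched as follows. This is not the project's measurement code. The header name `x-cache-layers` and its `layer=HIT|MISS` value format are hypothetical stand-ins for whatever headers a given CDN actually exposes, and throughput is assumed here to be chunk size divided by the time from request to last byte.

```python
from dataclasses import dataclass

# Hypothetical header name and value format; real CDNs differ.
# Example value: "edge=MISS, parent=HIT", listing layers from nearest to farthest.
CACHE_HEADER = "x-cache-layers"


@dataclass
class ChunkRecord:
    index: int
    served_from: str
    ttfb_ms: float
    size_bytes: int
    throughput_mbps: float


def classify_serving_layer(headers):
    """Return the first layer reporting HIT, or 'origin' if every layer missed."""
    normalized = {key.lower(): value for key, value in headers.items()}
    value = normalized.get(CACHE_HEADER, "")
    for entry in value.split(","):
        layer, _, status = entry.strip().partition("=")
        if status.strip().upper() == "HIT":
            return layer.strip()
    return "origin"


def make_record(index, headers, t_request, t_first_byte, t_last_byte, size_bytes):
    """Build a per-chunk record from timestamps (in seconds) and response size."""
    elapsed = t_last_byte - t_request
    throughput_mbps = (size_bytes * 8 / elapsed) / 1e6 if elapsed > 0 else 0.0
    ttfb_ms = (t_first_byte - t_request) * 1000.0
    return ChunkRecord(index, classify_serving_layer(headers), ttfb_ms,
                       size_bytes, throughput_mbps)
```

Collecting one such record per chunk is enough to plot time to first byte and throughput against serving location for a session.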

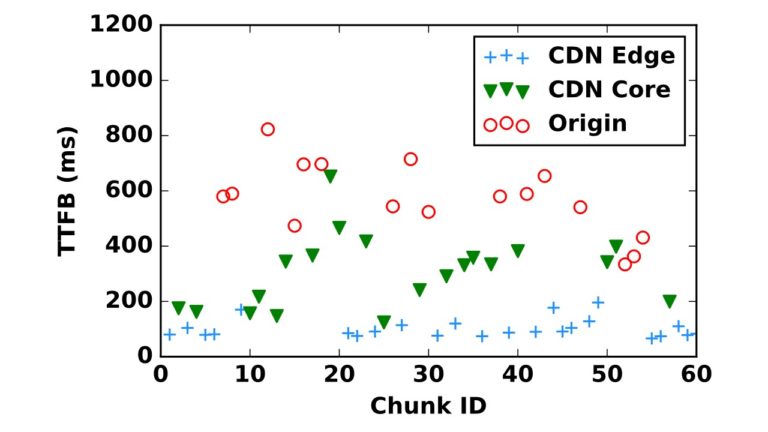

Figure 3

While one would expect large chunks of popular videos to be served from a closer location, and chunks of less popular videos to be served from more distant locations, the results showed, somewhat surprisingly, that chunks within the same session may be served from different locations. Figure 3 illustrates this for an example video session from one location. Each point indicates one chunk, and the colors in the legend indicate where the chunk is served from (the origin or a given CDN layer). The Y-axis indicates the time taken for the first byte of the response to be received, in milliseconds (Time to First Byte, or TTFB). Notice the significant variation in first-byte latency across chunks depending on the serving location. The results showed that while chunks at the start of a session are more likely to be served from edge locations (possibly because users more frequently view earlier parts of a video), consecutive chunks are often served from different locations in both the initial and later stages of a session.

A key question is the impact of these latency differences on application-perceived throughput, which directly affects the performance of streaming video applications. The results show a clear impact, but the effect is nuanced and highly sensitive to the client location, the CDN, and the time of day.

Figure 4


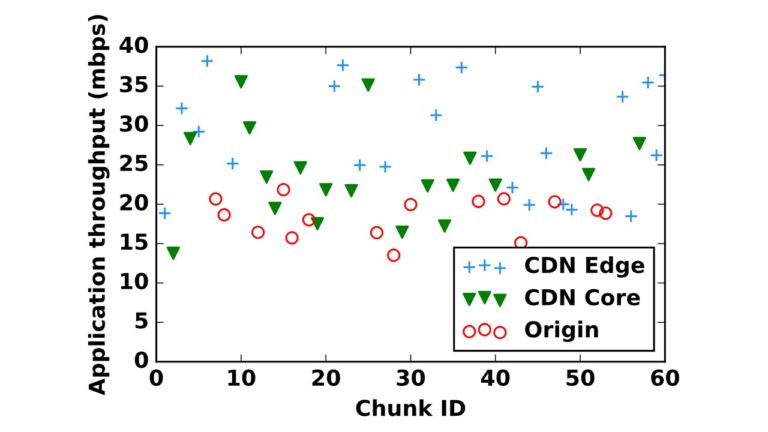

Figure 5

Figures 4 and 5 illustrate the issues for two different video sessions served from the same client location and the same CDN, but at two different times. Each figure shows where each chunk in the session is served from and the throughput perceived by the application for that chunk. The graph in Figure 4 shows fairly strong variations in throughput based on the serving location, while the graph in Figure 5 does not. A number of factors explain such differences, including the size of the chunks; whether the throughput bottleneck is between the edge server and the client, or between the CDN edge and the CDN core or origin (note that the bottleneck may vary across locations, and across time for the same location); and whether certain optimizations for large file transfers are enabled by the CDN.

How do these results translate to the video quality of experience? First, observe that the results depend on the maximum video bitrate used. For instance, for the session corresponding to Figure 4, while there are significant throughput differences based on serving location, these differences are most relevant for emerging high-bitrate 4K video; for lower-bitrate video, throughput from all locations is sufficiently high that the video experience is unlikely to be affected.

Figure 6


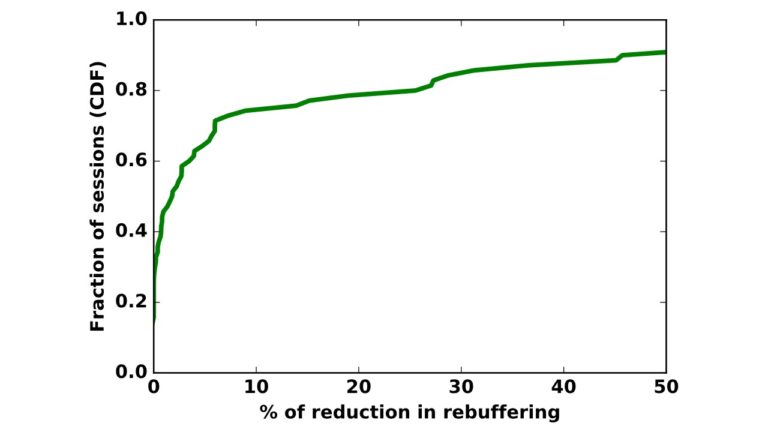

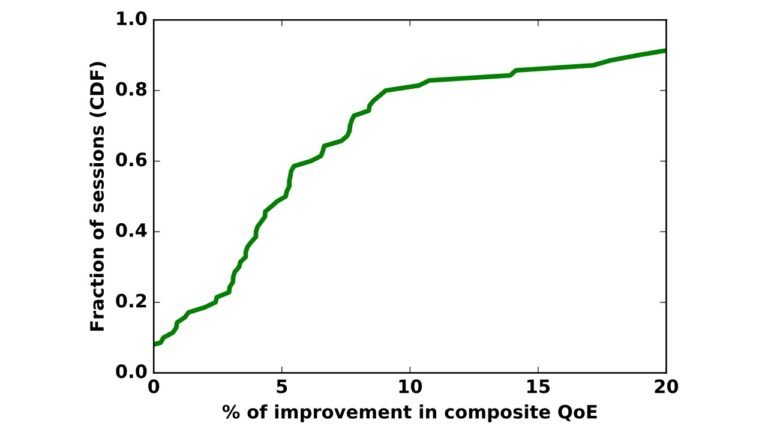

Figure 7

The results also have important implications for Adaptive Bit Rate (ABR) algorithms for video streaming. A key challenge for ABR algorithms is the need to predict throughput accurately, because prediction errors can lead to poor bitrate adaptation decisions. ABR algorithms today are agnostic as to whether an object hits or misses in the CDN, and where in the hierarchy it is served from, which can lead to prediction inaccuracies. The project also evaluated the potential benefits of CDN-aware schemes, which expose to the client where the next video chunk will be served from, by suitably altering a representative, state-of-the-art ABR algorithm.
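One way to picture CDN awareness is a throughput predictor that keeps separate history for each serving location. The sketch below is a hypothetical illustration of that idea, not the modified ABR algorithm evaluated in the project; the class name, the harmonic-mean estimator, and the fallback rule are all assumptions.

```python
from collections import defaultdict
from statistics import harmonic_mean


class CdnAwarePredictor:
    """Hypothetical predictor that conditions on where the next chunk is served from."""

    def __init__(self, window=5):
        self.window = window
        self.by_layer = defaultdict(list)  # serving layer -> throughput samples
        self.all_samples = []              # layer-agnostic history

    def observe(self, layer, throughput_kbps):
        """Record the throughput seen for a chunk served from the given layer."""
        self.by_layer[layer].append(throughput_kbps)
        self.all_samples.append(throughput_kbps)

    def predict(self, next_layer=None):
        """Predict throughput, using per-layer history when the layer is known."""
        samples = self.by_layer.get(next_layer, []) if next_layer else []
        if not samples:
            # Unknown or unseen layer: behave like a CDN-agnostic predictor.
            samples = self.all_samples
        recent = samples[-self.window:]
        return harmonic_mean(recent) if recent else 0.0
```

When a session alternates between fast edge hits and slower origin fetches, the layer-agnostic estimate sits between the two and is wrong for both, whereas the per-layer estimate tracks each case separately.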

Experiments on an emulation testbed show that incorporating CDN awareness reduces prediction error for 81.7% of sessions, by 17.16% on average. Figures 6 and 7 show the resulting benefit for video experience. Figure 6 shows that many sessions see reduced rebuffering, with a reduction of more than 25% for 20% of the sessions. Figure 7 shows that a well-accepted metric of video Quality of Experience (QoE), which combines multiple factors such as the rebuffering experienced at the client, the bitrates selected, and the fluctuation in bitrates, increases by more than 10% for 20% of the sessions. Overall, these results show the potential of edge deployments to enhance the quality of experience of video streaming, but they also indicate that fully tapping into the benefits may require greater synergy between the client algorithm and the edge server.
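A composite QoE metric of the kind mentioned above is often written as a linear combination of the three factors. The sketch below shows one such form as an assumption; the exact metric and weights used in the study are not stated here, so the penalty values are purely illustrative.

```python
def session_qoe(bitrates_mbps, rebuffer_s, rebuffer_penalty=4.0, smooth_penalty=1.0):
    """Illustrative linear QoE: reward bitrate, penalize stalls and bitrate switches.

    bitrates_mbps: bitrate selected for each chunk, in playback order.
    rebuffer_s: total rebuffering time over the session, in seconds.
    The penalty weights are hypothetical defaults, not values from the study.
    """
    quality = sum(bitrates_mbps)
    switching = sum(abs(nxt - cur)
                    for cur, nxt in zip(bitrates_mbps, bitrates_mbps[1:]))
    return quality - rebuffer_penalty * rebuffer_s - smooth_penalty * switching
```

Under this form, a session that holds a steady, slightly lower bitrate can score higher than one that reaches a higher bitrate but stalls or oscillates.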

In summary, edge networking continues to gain intelligence and capacity to support evolving network demands and superior network experiences. Increasingly, global service providers are making networking investments and architectural transformations to bolster capabilities at the network edge. Based on the Cisco VNI analysis, 33 percent of global service provider network capacity will be within a metro network by 2022 (up from 27 percent in 2017). By comparison, 24 percent of global service provider network capacity will be in regional backbones by 2022 (down from 25 percent in 2017), and 43 percent will be in cross-country backbones by 2022 (down from 48 percent in 2017).

Cisco sponsors academic and institutional research through its Research and Open Innovation initiative. Research, funded through gifts made directly to the institution, is designed to be openly available and is expected to lead to publication in journals or conference proceedings. Cisco strives for its grants to have maximal impact and so tends to focus on research with broad industry appeal. In this blog, we have summarized some recent results from a Cisco-funded project at Purdue University that are particularly relevant to edge computing.

Hot Tags :

Service Provider

5G

Cisco VNI

cdn

content delivery network

multi-access edge computing

CDN Caching
