This blog continues Cisco's IP for Broadcasters blog series, where we explore topics of interest to media companies and broadcasters that are transforming their strategy, network, and capabilities. The current global pandemic has accelerated many SDI-to-IP transition projects. Check out the series, which offers field-proven experience to help guide you through the transition and achieve your goals, fast. Here we will explore buffering basics for broadcast engineers, with tips to reduce jitter and lower latency.

One of the most common topics of concern for media and entertainment companies is the use of deep buffers on network switches. It's a popular topic because it's highly controversial. Why? On top of costing more money, deep buffers can also increase latency and reduce performance when used incorrectly. With cost and performance being the two greatest factors when designing broadcast and media networks, this can lead to unexpected roadblocks. This blog digs into buffers and how they apply to media applications with uncompressed media over IP.

Do you really need large buffers in switches to get "optimal performance"? While there are instances where large buffers are required, or a good idea, using them for some workflows can add latency and reduce performance. In certain environments, especially multicast, it appears that large buffers are in fact being used to mask shortcomings in switch ASICs or systems.

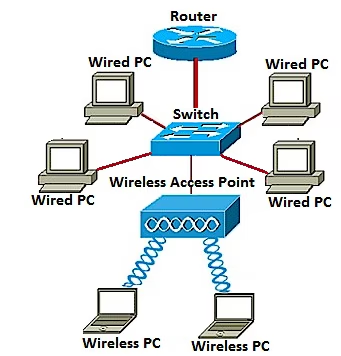

A buffer is a finite piece of memory located on the networking node. In case of congestion, packets temporarily sit in this buffer until they are scheduled to leave the interface. Buffers are needed to handle interface speed conversions, traffic bursts, and many-to-one communication.

Figure 1 - Buffer types

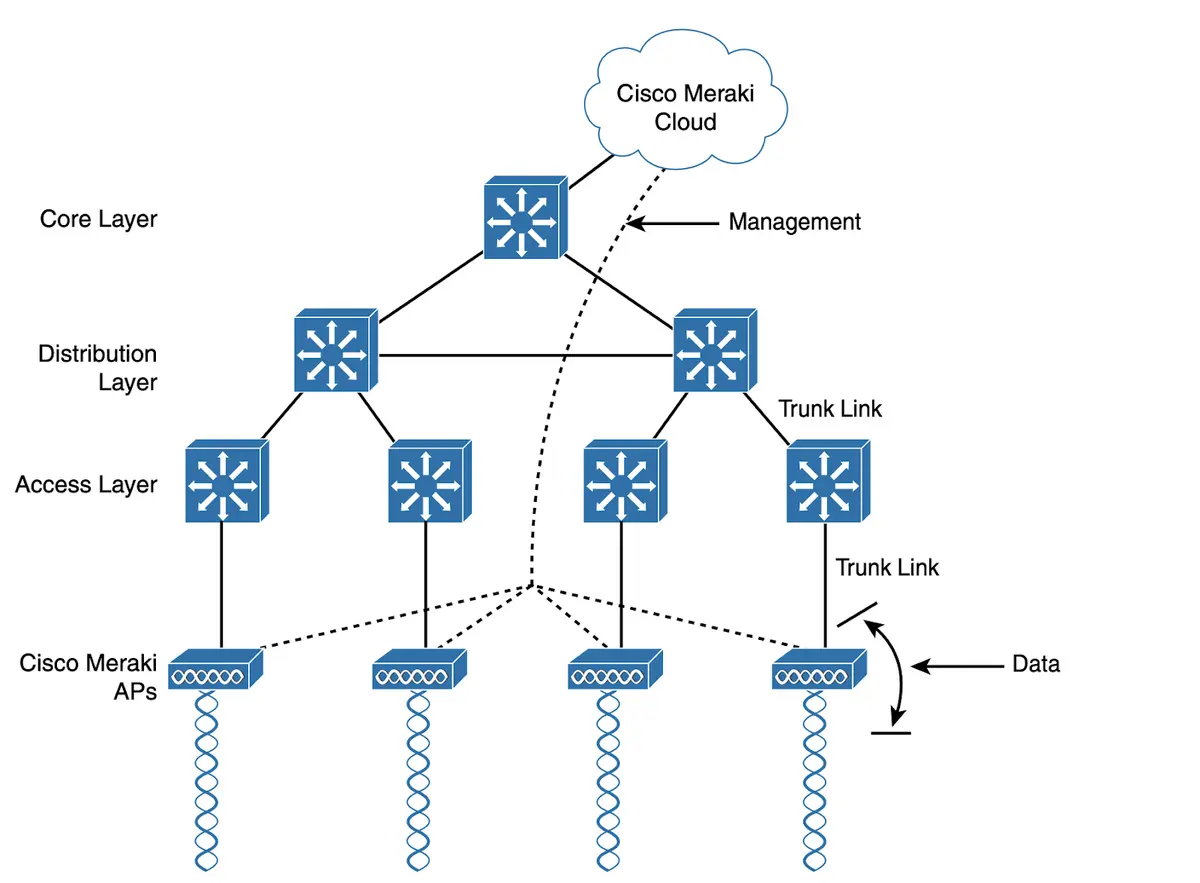

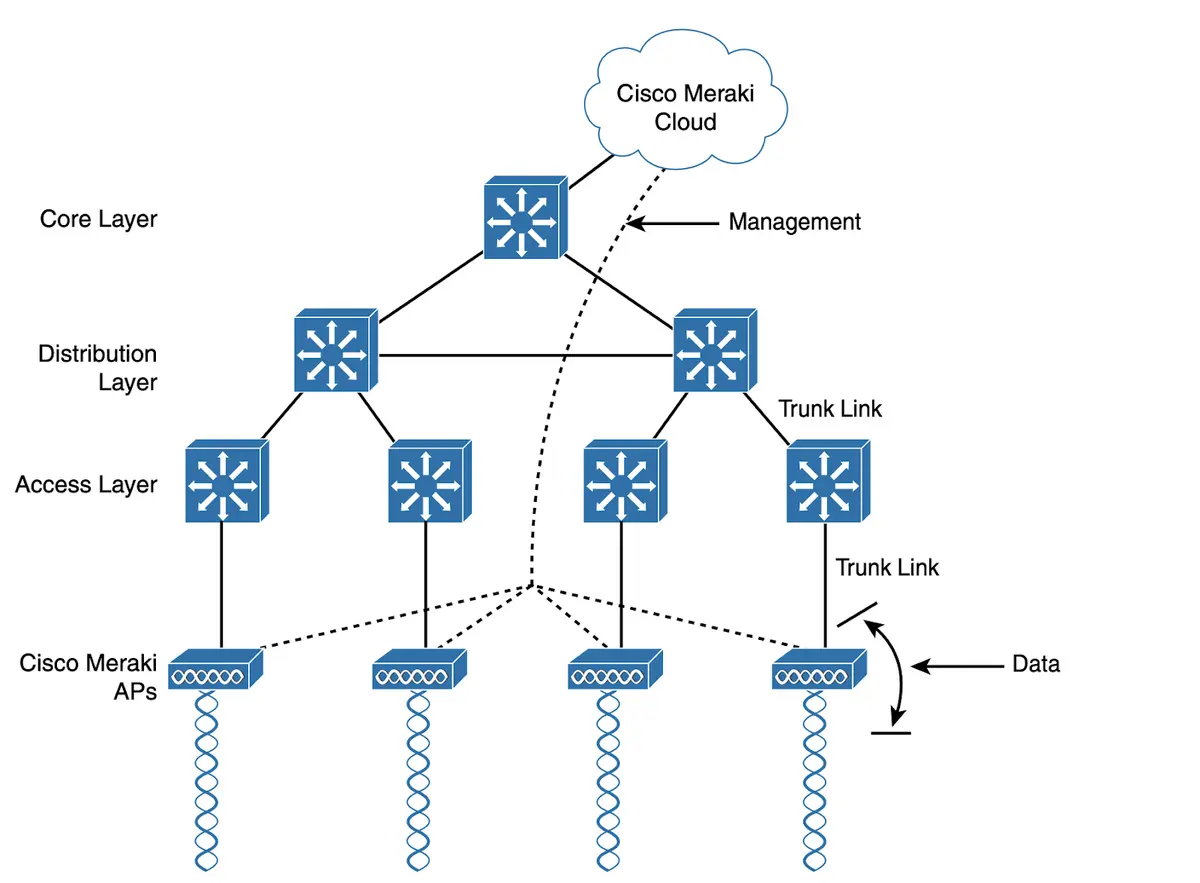

A queue is a logical separation of traffic within a buffer. Through scheduling, queues control the order in which traffic leaves the buffer, based on each queue's priority. Here I will focus on the two queuing architectures most common in media deployments: Virtual Output Queue (VOQ) with ingress and egress buffers, and Output Queuing with an egress buffer.

With VOQ, 90-98 percent of the buffer is allocated to the ingress ports, and the remaining 2-10 percent is a shared buffer used by the egress ports. VOQ is typically used in "Deep Buffer" switches. In this model the ingress buffer is divided into VOQs to simulate an egress buffer and prevent Head of Line Blocking (HoLB).

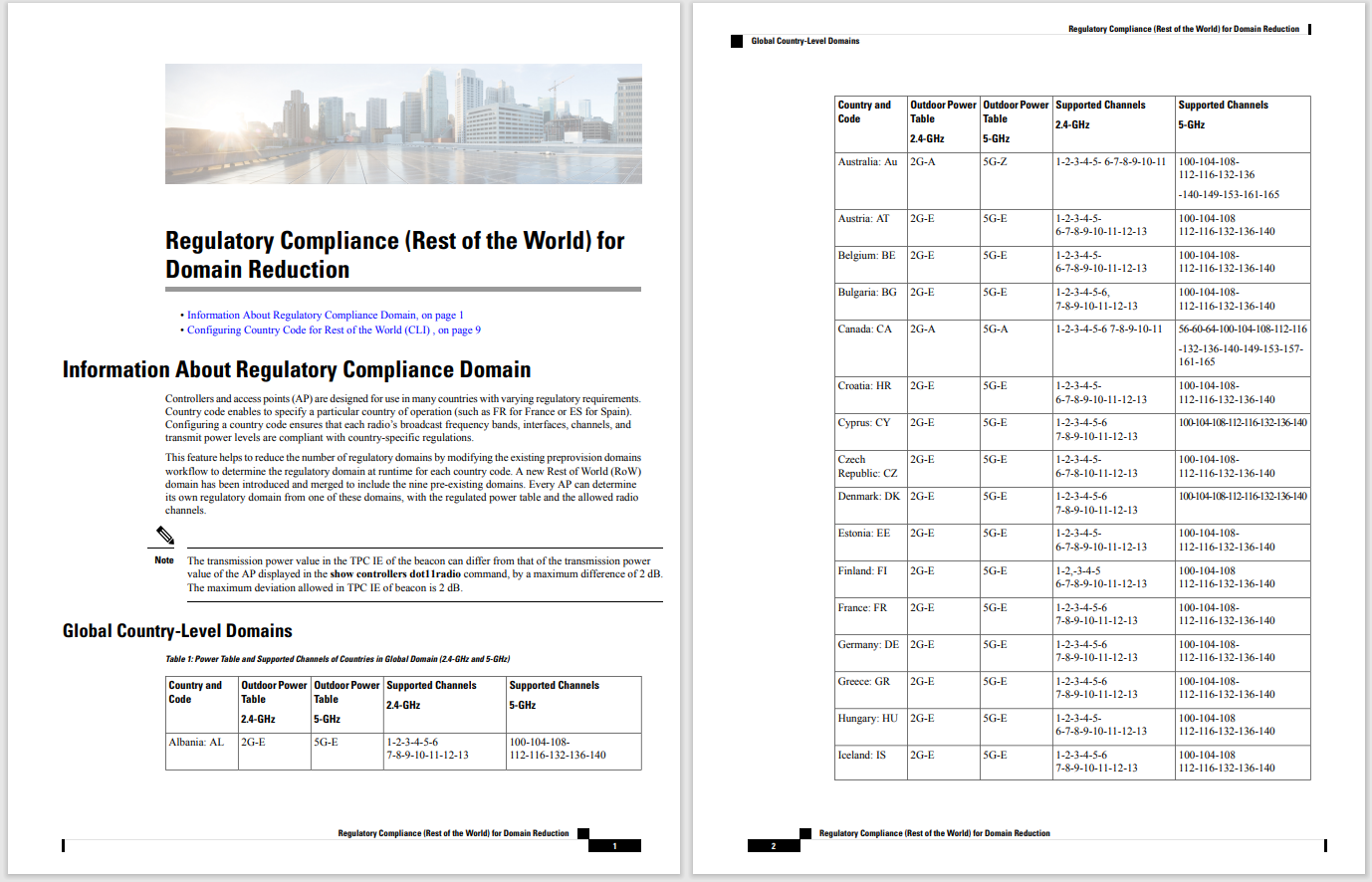

Because unicast traffic is a 1:1 relationship, ingress VOQ buffers are useful for it: we can place unicast traffic in separate queues and buffer it until the egress port is ready to receive it. Multicast, however, is a 1:N relationship. If we place multicast into an ingress buffer, in a VOQ corresponding to a receiver's multicast group, then even if only one of the receiver ports is congested, all other receiver ports will be impacted. That's because the traffic comes from a single queue and is replicated in the egress line card(s), which can cause streams to arrive late, packets to be dropped, and other negative effects.
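To make the blocking effect concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not a description of any real switch: time advances in ticks, one packet can be forwarded per tick, and the function names and port labels are hypothetical. It compares a single shared ingress queue for a multicast group against independent per-port egress queues when one receiver port is congested.

```python
from collections import deque


def single_ingress_queue(num_packets, receivers, congested_port, congested_ticks):
    """One shared FIFO per group: the head packet waits for every receiver."""
    queue = deque(range(num_packets))
    delivered_at = {port: [] for port in receivers}
    tick = 0
    while queue:
        # While any receiver is congested, the head of the queue cannot move.
        blocked = congested_port in receivers and tick < congested_ticks
        if not blocked:
            queue.popleft()
            for port in receivers:
                delivered_at[port].append(tick)
        tick += 1
    return delivered_at


def per_port_egress_queues(num_packets, receivers, congested_port, congested_ticks):
    """One queue per egress port: only the congested port is delayed."""
    queues = {port: deque(range(num_packets)) for port in receivers}
    delivered_at = {port: [] for port in receivers}
    tick = 0
    while any(queues.values()):
        for port, q in queues.items():
            port_blocked = port == congested_port and tick < congested_ticks
            if q and not port_blocked:
                q.popleft()
                delivered_at[port].append(tick)
        tick += 1
    return delivered_at
```

With three receivers and one of them congested for five ticks, the shared queue delays delivery to all three ports, while the per-port model delays only the congested one.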

You could overcome this by treating multicast like unicast. If the ingress line card replicates the traffic, creating one copy of the group per egress receiver, you could then place each copy into the VOQ buffer and treat it like unicast. However, with SMPTE ST 2110 and other high-bandwidth flows, this would oversubscribe the crossbar very quickly and cause packets to be dropped.
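A rough back-of-the-envelope calculation shows why ingress replication scales poorly. The sketch below is illustrative only: the flow rate, flow count, receiver count, and fabric capacity are assumed numbers, and `fabric_load_gbps` is a hypothetical helper, not a vendor formula.

```python
def fabric_load_gbps(flow_gbps, flows, copies_per_flow):
    """Bandwidth crossing the fabric when each flow is sent copies_per_flow times."""
    return flow_gbps * flows * copies_per_flow


def is_oversubscribed(load_gbps, fabric_capacity_gbps):
    """True when the offered load exceeds what the fabric can carry."""
    return load_gbps > fabric_capacity_gbps


# Assumed example values: 20 flows of 1.5 Gbps each, 8 receivers per flow
# spread over 2 egress line cards, and 100 Gbps of fabric capacity.
ingress_replicated = fabric_load_gbps(1.5, flows=20, copies_per_flow=8)
egress_replicated = fabric_load_gbps(1.5, flows=20, copies_per_flow=2)
```

Under these assumptions, replicating at ingress sends one copy per receiver across the fabric (240 Gbps), while replicating at egress sends one copy per egress line card (60 Gbps).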

For these reasons, we treat multicast a little differently in VOQ. A set number of queues for multicast exists in the egress buffer. All multicast is placed in these queues, buffered in case of congestion, and scheduled on the port toward receivers, which avoids blocking and reduces delay for other receivers in the same group.

SMPTE ST 2022-6, ST 2110, and ST 2059 traffic may be affected by HoLB, which can impact multiple streams.

Figure 2 - Ingress buffering for broadcast traffic - Head of Line Blocking

With output queuing, the buffer is shared between egress ports for unicast and multicast traffic. The output queue buffer is usually dynamically allocated to ports under congestion to maximize traffic preservation. Since every port has N queues, no HoLB occurs for unicast or multicast. Take a look at Cisco's Cloud Scale ASIC to see our take on egress buffering.
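One well-known way to share a buffer dynamically is a "dynamic threshold" policy, in which a port may keep queuing only while its occupancy stays below a multiple (`alpha`) of the buffer that is still free. The sketch below illustrates that general idea only. It is not a description of how any particular ASIC allocates buffer, and the class name, cell units, and `alpha` default are assumptions.

```python
class SharedEgressBuffer:
    """Toy shared buffer using a dynamic-threshold admission rule."""

    def __init__(self, total_cells, alpha=1.0):
        self.total_cells = total_cells
        self.alpha = alpha
        self.occupancy = {}  # port name -> cells currently queued

    def free_cells(self):
        return self.total_cells - sum(self.occupancy.values())

    def try_enqueue(self, port, cells=1):
        """Admit the cells only if the port stays under alpha * free buffer."""
        used = self.occupancy.get(port, 0)
        free = self.free_cells()
        if cells > free or used + cells > self.alpha * free:
            return False
        self.occupancy[port] = used + cells
        return True

    def dequeue(self, port, cells=1):
        """Release cells when the port transmits."""
        used = self.occupancy.get(port, 0)
        self.occupancy[port] = max(0, used - cells)
```

The design choice here is that a single congested port can borrow a large share of the pool, but never all of it, so a second port that becomes congested later still finds free buffer.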

SMPTE ST 2110, ST 2022-6, and others are multicast traffic streams that require low latency and low jitter (changes in latency). Jitter can be introduced by buffering, where packets are delayed by congestion. With this in mind, we can start to look towards how much buffer is needed for our use case.
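As a small illustration of what "changes in latency" means in practice, the following sketch computes jitter from send and receive timestamps. The timestamps are made-up values in microseconds and the function names are hypothetical. The smoothed estimator follows the interarrival jitter formula from RFC 3550 (the RTP specification), applied here to one-way delays.

```python
def one_way_delays(send_times_us, recv_times_us):
    """Per-packet transit time: receive timestamp minus send timestamp."""
    return [recv - send for send, recv in zip(send_times_us, recv_times_us)]


def peak_to_peak_jitter(delays):
    """Spread between the slowest and the fastest packet."""
    return max(delays) - min(delays)


def smoothed_jitter(delays):
    """RFC 3550 style running estimate: J += (|D| - J) / 16."""
    jitter = 0.0
    for previous, current in zip(delays, delays[1:]):
        jitter += (abs(current - previous) - jitter) / 16.0
    return jitter


# Assumed example: the third packet waits an extra 40 microseconds in a queue.
delays = one_way_delays([0, 100, 200, 300], [50, 150, 290, 350])
```

A single packet held in a buffer is enough to raise both measures, which is why queuing delay on the media path matters even when no packets are dropped.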

Real-time multicast traffic should be forwarded immediately without buffering for long periods of time. This is to avoid causing additional latency and higher jitter. Switches should have enough buffer to absorb bursts of traffic. Using a dynamically shared buffer architecture allows flexible use of buffer during burst and congestion for optimal results.
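To get a feel for how much buffer a burst actually needs, here is a simplified fluid-model estimate. It assumes a single burst arriving at a constant ingress rate and draining at a constant egress rate; the burst size and port speeds are illustrative assumptions, and the helper names are hypothetical.

```python
def backlog_bytes(burst_bytes, ingress_gbps, egress_gbps):
    """Peak queue depth while a burst arrives faster than the port drains."""
    if egress_gbps >= ingress_gbps:
        return 0.0  # the port keeps up, so nothing accumulates
    return burst_bytes * (1 - egress_gbps / ingress_gbps)


def queueing_delay_us(backlog, egress_gbps):
    """Time to drain the backlog at the egress rate, in microseconds."""
    return backlog * 8 / (egress_gbps * 1e3)


# Assumed example: a 1 MB burst arriving at 100 Gbps toward a 25 Gbps port.
peak = backlog_bytes(1_000_000, ingress_gbps=100, egress_gbps=25)
delay = queueing_delay_us(peak, egress_gbps=25)
```

In this example the burst needs roughly 750 KB of buffer and adds about 240 microseconds of delay to the last packet queued, which shows the trade-off: the more a buffer holds, the more latency it can add.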

To guarantee minimal latency and jitter, we can use QoS to protect our real-time media traffic.

We can place live production traffic in strict, high-priority queues. This ensures that it is dequeued ahead of all other traffic. Using QoS, we can also onboard file-based workflows onto the same network and have them use a lower-priority queue.
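The behavior of a strict-priority scheduler can be sketched in a few lines of Python. This is a conceptual model only, with a hypothetical class name and packet labels: queue 0 is the highest priority and is always served first when it has traffic.

```python
from collections import deque


class StrictPriorityScheduler:
    """Serve the highest-priority non-empty queue first (index 0 is highest)."""

    def __init__(self, num_queues):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, priority, packet):
        self.queues[priority].append(packet)

    def dequeue(self):
        """Return the next packet to transmit, or None if all queues are empty."""
        for queue in self.queues:
            if queue:
                return queue.popleft()
        return None


scheduler = StrictPriorityScheduler(num_queues=2)
scheduler.enqueue(1, "file-1")   # file-based workflow, lower priority
scheduler.enqueue(0, "video-1")  # live production, strict priority
scheduler.enqueue(1, "file-2")
scheduler.enqueue(0, "video-2")
order = [scheduler.dequeue() for _ in range(4)]
```

Even though the file transfer packets arrived first, both video packets leave the port ahead of them.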

It's important to think of your network architecture when considering where you need buffering. In a spine/leaf topology, the majority of your buffering requirements exist on the leaves. This is where you're doing speed conversion (10/25/40/100GE) and congestion is more likely to occur. This is a good location for your egress buffer. On the spine, traditionally all interfaces are the same speed and are less likely to experience congestion. Either a VOQ or shared egress buffer will work for a spine, as both can buffer at egress and do a good job when speed conversion isn't required. Check out our IP Fabric for Media design guide to see some supported network architectures.

Deep buffer switches are unnecessary for real-time multicast media: as we have seen, multicast traffic does not use the ingress buffers, in order to avoid HoLB, and instead uses smaller egress buffers that are optimized for multicast traffic and replication. The correct architecture is a properly sized, shared egress queue buffer, with real-time media placed in the highest-priority queue and other traffic in lower-priority queues. With this QoS design, we can ensure our live traffic is never impacted by congestion or by any other traffic.

Check out the Cisco Portfolio Explorer to gain additional insight into how Cisco can help broadcasters and media companies accelerate ideas to audiences.

Hot Tags :

Cisco Media

Cisco IP Fabric for Media
